In a world where the pace of life keeps accelerating and stress levels reach new heights, many people have turned to mindfulness and meditation as an oasis of calm and clarity.

The power of thought over the body

Human thinking has a striking effect on the body, something underscored by research in psychoneuroimmunology: the study of how psychological processes affect the immune system and overall health. One of the most fascinating examples of this connection is the placebo effect.

Creative thinking: Exploring limitless potential

Creative thinking is the heart of innovation and personal expression, and it plays a central role in everything from art and music to science and business strategy. But what does it mean to think creatively, and how can we cultivate this valuable state of mind?

Intuition vs. rational thinking / Heart or head

When making decisions, we often face an inner struggle: should we follow our gut feeling or base the decision on logical analysis? This duality between intuition and rational thinking has fascinated philosophers, psychologists and writers for centuries.

But which one should we trust? Let's take a closer look at these two approaches to decision-making.

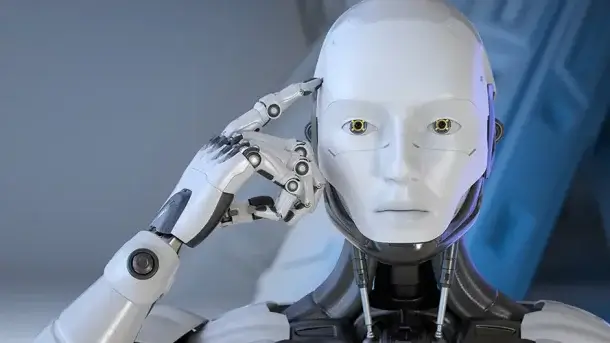

How AI and machine learning can emulate or surpass human thinking

Artificial intelligence (AI) and machine learning (ML) have made great advances over the past decade. These advances raise the question of whether AI can emulate, or even surpass, the capacity of human thought.


Neuroplasticity: How the brain can change and adapt

Neuroplasticity, also called brain plasticity or cerebral plasticity, is the brain's remarkable ability to "reorganize" itself by forming new neural connections throughout life, for example after a brain injury.

Mindfulness and meditation

In today's fast-paced, hyperconnected world, our minds can become overwhelmed by constant stimulation and distraction. Mindfulness and meditation offer a path to inner calm, better concentration and a deeper understanding of ourselves and our surroundings.

The role of thought in the modern dating world

In an age of dating apps, quick "swipes" and digital first impressions, the dating landscape has changed dramatically. Yet at the center of this whirling dating carousel lies an unchanging truth: our thoughts play a decisive role in how we perceive, approach and experience love and relationships.

Dreams

Dreams have always been a mysterious and fascinating part of the human experience. They form a kind of "inner theater" where the power of our thoughts plays the lead role, staging scenes and revealing hidden parts of our psyche.

The role of thought in self-help: Creating a reality through conscious thinking

What is often overlooked is that our thoughts affect not only our emotional state but also our physical body. Negative thoughts can cause physical stress, which in turn can affect heart health, immune function and more.